Introduction

Search optimization has evolved again. We’ve entered the era of Generative Engine Optimization (GEO), a discipline focused on ensuring your content appears in AI-generated search results, not just traditional SERPs. As AI search engines and agentic systems like ChatGPT, Gemini, and Claude start surfacing content directly, the structure and visibility of your site have never mattered more.

What Is Generative Engine Optimization

GEO is the practice of making your site readable, citable, and accessible to AI agents and large language models. It builds on the same principles as SEO but prioritizes how AI systems interpret, not just index, your content.

That means:

- Providing structured, context-rich content using schema.org

- Ensuring content is visible at page load (not hidden behind JavaScript)

- Maintaining accurate, semantically marked-up data for AI agents to use in responses

I’ve been implementing schema.org structured data for over ten years, and the trend is clear. Structured data is no longer just for Google snippets; it now determines visibility across generative AI ecosystems.

Tools for Testing GEO Readiness

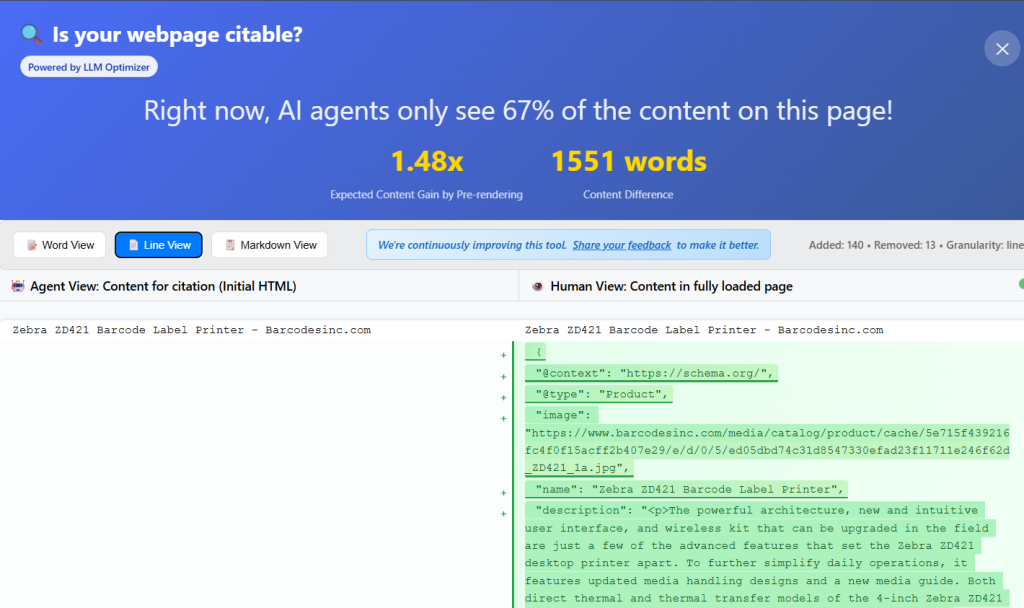

Adobe recently released the Adobe LLM Optimizer Chrome Extension, which quickly shows you how much of your content is visible to AI agents and AI search engines.

The tool provides a visual comparison between what humans see and what AI agents read. This is crucial because many modern sites rely heavily on deferred JavaScript rendering, meaning critical content may never load for an AI crawler.

Poorly structured or JS-driven pages can appear empty to bots, making your content invisible to LLMs like ChatGPT or Gemini.

How to Check What AI Agents See

Use the URL Inspection tool in Google Search Console and select “Test Live URL → View Tested Page.” From there, inspect the HTML and JavaScript console messages to identify what content was blocked or deferred during the crawl.

Keep accounting for the standard Google user agents as well, which vary by service:

Google Search: Googlebot

Google Shopping: Storebot-Google

Google News: Googlebot-News

Google Images: Googlebot-Image

Google Video: Googlebot-Video

For GEO and AI agents, there’s a new class of user agents you also need to account for:

Gemini: Google-Extended

ChatGPT Search: OAI-SearchBot

ChatGPT User Actions: ChatGPT-User

Claude Search: Claude-SearchBot

Claude User Actions: Claude-User

Vertex AI Agents: Google-CloudVertexBot

OpenAI Model Training: GPTBot

Anthropic Model Training: ClaudeBot

NotebookLM / Learning Models: Google-NotebookLM

Reference: Google’s official list of user agents
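To see which of these agents are actually hitting your site, you can scan the User-Agent values in your access logs. The following is a minimal sketch, not a definitive implementation: the token names come from the list above, while the category labels, the function name `classify_user_agent`, and the sample header strings are assumptions made for illustration. Google-Extended is left out on the assumption that it works as a robots.txt control token rather than a value sent in request headers; check Google’s list to confirm.

```python
# Tokens copied from the list above. The category labels on the right are
# illustrative assumptions, not an official taxonomy.
AI_AGENT_TOKENS = {
    "OAI-SearchBot": "search",
    "Claude-SearchBot": "search",
    "ChatGPT-User": "user_action",
    "Claude-User": "user_action",
    "GPTBot": "training",
    "ClaudeBot": "training",
    "Google-CloudVertexBot": "agent",
    "Google-NotebookLM": "agent",
}


def classify_user_agent(user_agent: str) -> str:
    """Return the category of the first known token found, else 'other'."""
    ua = user_agent.lower()
    for token, category in AI_AGENT_TOKENS.items():
        # Case-insensitive substring match against the full header value.
        if token.lower() in ua:
            return category
    return "other"


if __name__ == "__main__":
    # Hypothetical header values, shortened for readability.
    samples = [
        "Mozilla/5.0 (compatible; GPTBot/1.0)",
        "Mozilla/5.0 (compatible; ChatGPT-User/1.0)",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0",
    ]
    for sample in samples:
        print(classify_user_agent(sample), "<-", sample)
```

User-Agent headers can be spoofed, so treat the result as a hint for reporting rather than as proof of identity.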

Using Robots.txt for AI Agents

As with traditional crawlers, you can control access to your content for specific AI agents with simple robots.txt declarations:

User-agent: GPTBot

Disallow: /

User-agent: ChatGPT-User

Allow: /

Sitemap: https://www.example.com/sitemap.xml

This declaration gives you granular control: it lets ChatGPT-User fetch pages on behalf of ChatGPT users while blocking GPTBot, OpenAI’s model-training crawler.
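Before deploying rules like these, it can help to confirm that they behave as intended. Here is a small sketch using Python’s standard `urllib.robotparser` module to evaluate the declaration above. The helper name `allowed` and the product URL are assumptions for illustration, and real crawlers may interpret edge cases differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# The same rules as the robots.txt example above.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: ChatGPT-User
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""


def allowed(agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Report whether `agent` may fetch `url` under the given rules."""
    parser = RobotFileParser()
    # parse() accepts an iterable of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    page = "https://www.example.com/products/widget"  # hypothetical URL
    for agent in ("GPTBot", "ChatGPT-User"):
        print(agent, allowed(agent, page))
```

With these rules, the check reports False for GPTBot and True for ChatGPT-User.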

Testing Crawl Visibility Directly in Chrome

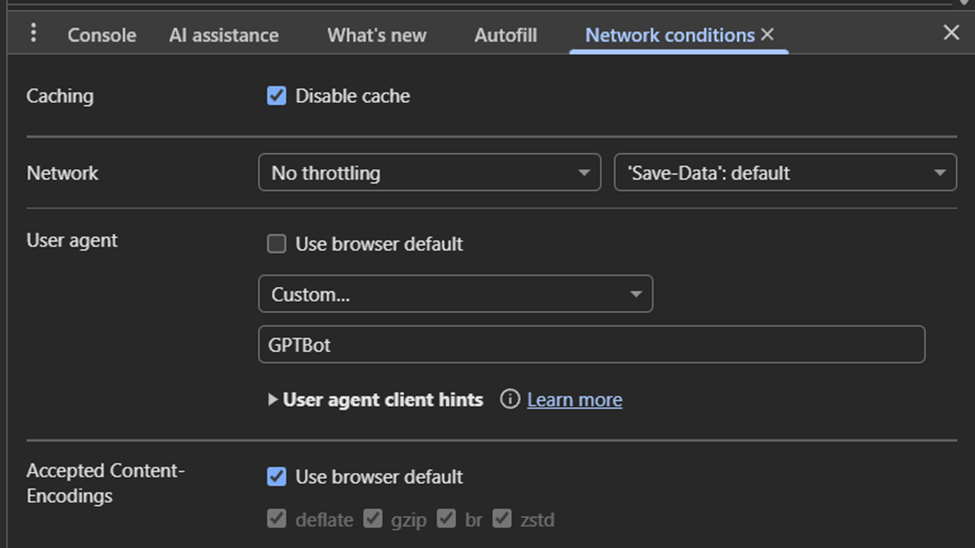

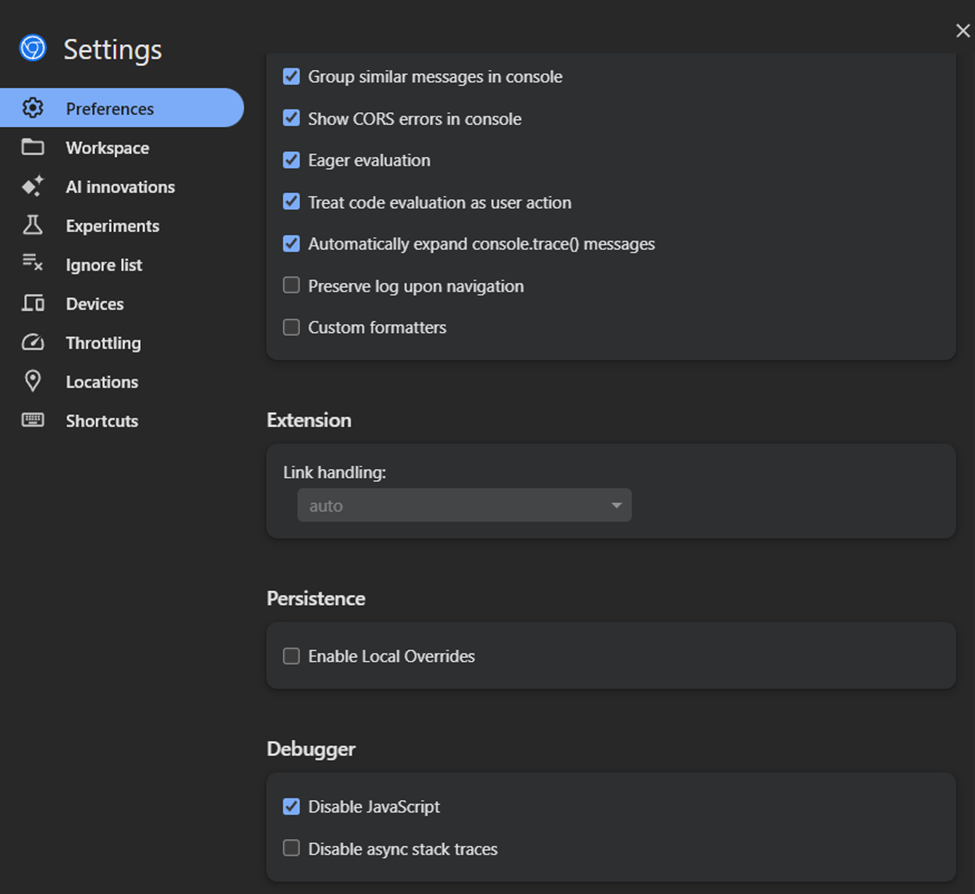

You can also test directly in Chrome Developer Tools.

Under Customize and control DevTools (the three vertical dots) > More tools > Network conditions, clear “Use browser default” and enter a custom user agent string or choose one of the preset values.

Then, under Settings > Preferences > Debugger, check Disable JavaScript.

After you refresh, the page should closely approximate what a crawler that does not execute JavaScript sees.
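The same check can be approximated outside the browser. The sketch below, which relies only on Python’s standard library, requests a page with a custom User-Agent and extracts the text present in the raw HTML without running any JavaScript. The names `fetch_as`, `static_text`, and `VisibleText`, plus the sample markup, are assumptions for illustration. It does not reproduce how any particular crawler parses a page.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class VisibleText(HTMLParser):
    """Collect text that sits outside <script> and <style> elements."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep non-empty text only when we are not inside a skipped element.
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def static_text(html: str) -> str:
    """Return the text available in the HTML before any script runs."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def fetch_as(url: str, user_agent: str) -> str:
    """Download a page while sending the given User-Agent header."""
    request = Request(url, headers={"User-Agent": user_agent})
    with urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


# Hypothetical page whose rating is only injected by JavaScript.
SAMPLE = """
<html><body>
  <h1>Blue Widget</h1>
  <div id="reviews"></div>
  <script>document.getElementById("reviews").textContent = "4.8 stars";</script>
</body></html>
"""

if __name__ == "__main__":
    print(static_text(SAMPLE))
```

For the sample markup, only the heading text survives; the rating never appears because it exists only inside the script. Against a live page you would call something like `static_text(fetch_as(url, "GPTBot"))` and look for missing key content.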

The Role of Structured Data

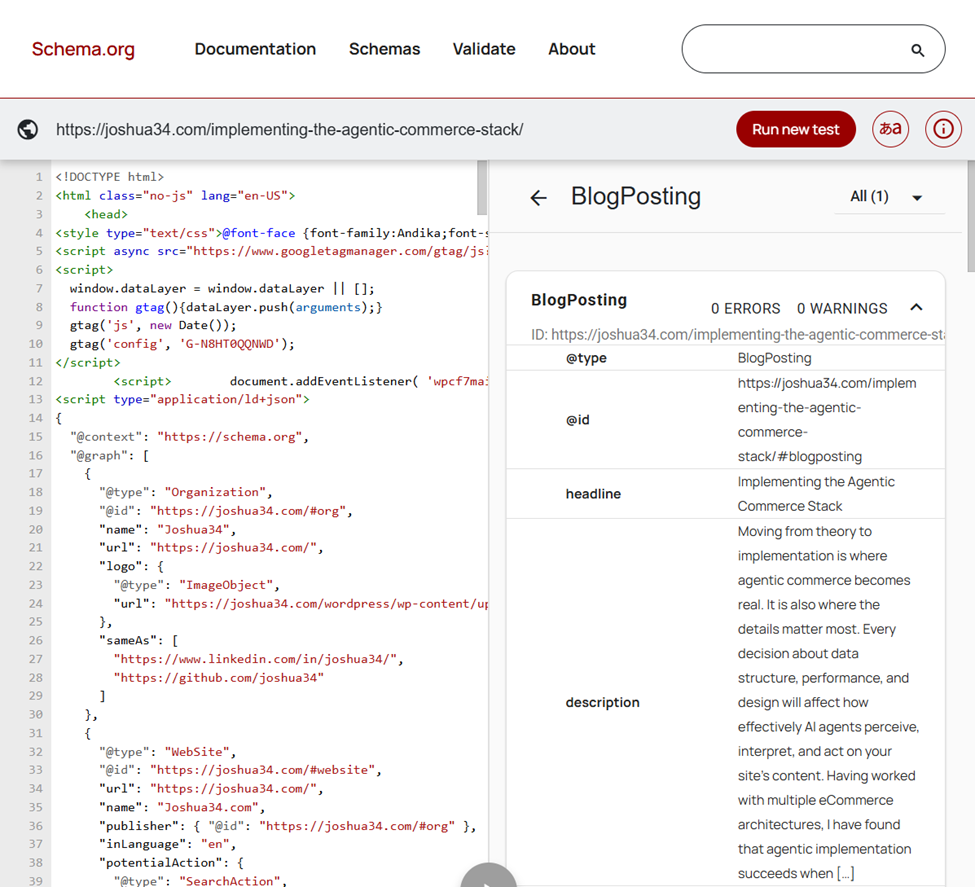

Structured data remains the foundation of digital visibility. I recommend the Schema.org Validator for comprehensive schema testing. Avoid the Google Rich Results Test, as it only validates markup eligible for visual snippets, not your full structured schema.

Structured data helps AI systems interpret product details, reviews, authorship, and organizational context, all signals they need to generate accurate, attributed answers.

For commerce sites, use at minimum:

- Product

- Offer

- AggregateRating

- Review

- WebSite and Organization
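As a rough illustration of how several of these types fit together, here is a sketch that builds a JSON-LD payload with Product, Offer, and AggregateRating and wraps it in a script tag. The function names, the product values, and the choice of properties are assumptions for the example; consult schema.org for the full set of properties each type supports.

```python
import json


def product_jsonld(name, sku, price, currency, rating, review_count):
    """Build a schema.org Product with a nested Offer and AggregateRating."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",  # fixed two-decimal string
            "priceCurrency": currency,
            "availability": "https://schema.org/InStock",
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }


def as_script_tag(data: dict) -> str:
    """Serialize the payload for embedding in server-rendered HTML."""
    # Escape "</" so a stray "</script>" in the data cannot close the tag.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    # Hypothetical product values.
    data = product_jsonld("Blue Widget", "BW-1", 19.9, "USD", 4.8, 27)
    print(as_script_tag(data))
```

Emitting the tag from the server-side template, rather than injecting it with JavaScript, keeps the markup in the initial HTML where a non-rendering crawler can read it.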

Optimizing for Humans, Crawl Bots, and AI Agents

The biggest challenge in GEO is balancing performance and accessibility. My approach is to separate the content layer from the presentation layer.

Human visitors should experience fast, Core Web Vitals-optimized pages. AI agents, however, should receive complete, static HTML with all key content visible immediately.

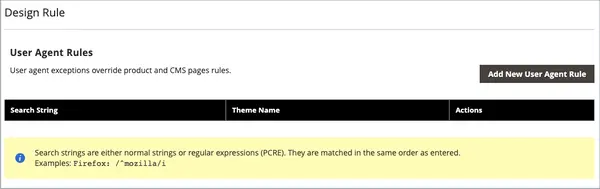

In Adobe Commerce (Magento), one effective method is to serve a lightweight child theme for AI agents and crawl bots. The goal is to remove unnecessary dependencies and render all key content via templates instead of JavaScript. This keeps the user experience optimized for visitors while ensuring crawl bots and AI systems see a full, static version of the page.

NOTE: The content itself must stay the same no matter which User-Agent requests the page. Never cloak content.
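One way to guard against accidental cloaking is to compare the text served to each audience. The sketch below assumes you already have the visible text of both variants as plain strings; the function name `parity_report` and the sample strings are hypothetical, and any threshold you apply to the similarity score is your own judgment call.

```python
from difflib import SequenceMatcher


def parity_report(human_text: str, crawler_text: str) -> dict:
    """Compare the wording of two page variants, word by word."""
    human_words = human_text.lower().split()
    crawler_words = crawler_text.lower().split()
    # ratio() is 1.0 for identical word sequences and falls as they diverge.
    matcher = SequenceMatcher(None, human_words, crawler_words, autojunk=False)
    return {
        "similarity": round(matcher.ratio(), 3),
        "only_human": sorted(set(human_words) - set(crawler_words)),
        "only_crawler": sorted(set(crawler_words) - set(human_words)),
    }


if __name__ == "__main__":
    # Hypothetical text extracted from the two themes.
    human = "Blue Widget $19.90 in stock"
    crawler = "Blue Widget $19.90 in stock free shipping"
    print(parity_report(human, crawler))
```

Words that appear in only one variant are the ones to review first, since they point to content that one audience sees and the other does not.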

The Future of Optimization

Traditional SEO focused on search rankings. GEO focuses on whether your site is part of the answer an AI gives.

It’s no longer just about keywords. It’s about being the source AI systems cite, reference, and transact through.

The next frontier of digital visibility will be won by those who:

- Expose data through clean schema

- Ensure AI agents can read all key content

- Build fast, lightweight experiences for both humans and machines
